project Betfair, part 2


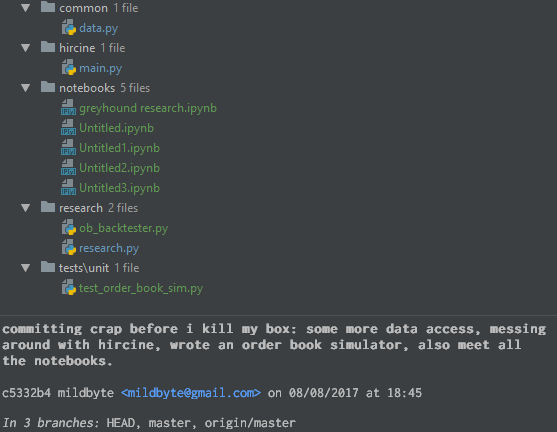

Probably the hardest thing about writing this will be piecing together what I was doing and thinking at the time. Keeping a development diary was a great idea; it's just a shame I started it a third of the way into the project, and the rest is scattered across various commit messages.

I would never have been able to get away with these commit messages at my previous job.

In any case, I started with how everything starts: gathering data.

Attempt 1: Betfair API-NG

Good news: Betfair has some APIs. Bad news: it costs £299 to access the API. Better news: I had already gotten an API key back in 2014 when it was free and so my access was maintained.

The first Betfair API I used is called API-NG. It's a REST API that lets you do almost everything the Web client does, including getting the (live or delayed) state of the runner order book (available and recently matched odds), getting information about given markets and runners, placing actual bets and getting the account balance.

Collecting order book data

Since naming things is one of the hard problems in computer science, I decided to pick the Daedric pantheon from The Elder Scrolls to name components of my new trading system, which would be written in Python for now.

- Hermaeus, "in whose dominion are the treasures of knowledge and memory". Hermaeus is a Python script that is given a Betfair market ID and a market suspend time (when the market's outcome becomes known) as well as a sample rate. When started, it repeatedly polls the REST API at the given sample rate to get the order book contents for all runners and dumps those into a PostgreSQL database.

- Meridia, "associated with the energies of living things". Meridia reads the Betfair market catalogue (say, all horse races in the UK happening today, their starting times and market IDs) and schedules instances of Hermaeus to start recording the data a given amount of time before the market gets suspended.

- Hircine, "whose sphere is the Hunt, the Sport of Daedra, the Great Game (which I have just lost), the Chase". Hircine would be the harness for whatever strategy I would decide to run: when executed on a given market, it would read the data collected by Hermaeus and output the desired number of OPCs we wished to hold.
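As a rough illustration of the Hermaeus polling loop described above, here is a minimal sketch. It is not the original script: `poll_order_book`, `fetch_book` and `store` are hypothetical names, and the actual API-NG request and the PostgreSQL insert are left to the caller-supplied callables.

```python
import time
from typing import Callable


def poll_order_book(fetch_book: Callable[[], dict],
                    store: Callable[[float, dict], None],
                    sample_interval: float,
                    suspend_time: float,
                    clock: Callable[[], float] = time.time,
                    sleep: Callable[[float], None] = time.sleep) -> int:
    """Call fetch_book() every sample_interval seconds until suspend_time.

    fetch_book and store are hypothetical stand-ins for the API request and
    the database write. Returns the number of samples taken.
    """
    samples = 0
    next_tick = clock()
    while next_tick < suspend_time:
        store(next_tick, fetch_book())
        samples += 1
        # Schedule against the intended tick time so that slow requests
        # do not make the sampling grid drift.
        next_tick += sample_interval
        delay = next_tick - clock()
        if delay > 0:
            sleep(delay)
    return samples
```

Passing `clock` and `sleep` in as parameters is only there so the loop can be exercised without waiting in real time.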

This was pretty much where I paused the design process: downstream of Hircine we would have some sort of a component that would determine whether to place a bet on a given market, but I didn't want to make a decision on what would happen later, since I wanted my further design decisions to be driven by looking at the data and seeing what would and wouldn't work.

I had decided to concentrate on pre-race greyhound and horse markets. Pre-race markets somehow still have dramatic odds movements (even though no new information that would affect the race outcome becomes known), but the odds don't move by more than 1-2 ticks at a time (unlike football, where a goal can immediately halve the implied probability for some markets, like the Correct Score market). In addition, in-play markets have a bet delay of 1-10 seconds depending on the sport, whereas in pre-race markets a submitted bet appears in the order book immediately.

In terms of numbers, there are about 30 horse races in the UK alone on any given day (and those usually are the most liquid, as in, with a lot of bets being available and matched, with action starting to pick up about 30 minutes before the race begins) and about 90 greyhound races (those are less liquid than horses with most of the trading happening in the last 10 minutes before the race begins).

What to do next?

Now, I could either beat other people by making smarter decisions, or I could beat them by making dumb decisions quicker. I quite liked the latter idea: most high-frequency trading strategies are actually fairly well documented, I imagined that "high frequency" on Betfair is much, much slower than high frequency in the real world, and making software faster is a better-defined problem than making a trading model make more money.

And, as a small bonus, trading at higher granularities meant that I didn't need as much data. If my strategy worked by trying to predict, say, which horse would win based on its race history or its characteristics, I would need to have collected thousands of race results before being able to confidently backtest anything I came up with. In a higher frequency world, data collection would be easier: there's no reason why the microstructure of one horse racing market would be different from another one.

The simplest one of those strategies would be market making. Remember me lamenting in the previous post that buying a contract and immediately selling it back results in a ~0.5% loss because of the bid-offer spread? As a market maker, we have a chance of collecting that spread instead: what we do is maintain both a buy (back) and a sell (lay) order in the hope that both of them will get hit, resulting in us pocketing a small profit and not having any exposure, assuming we sized our bets correctly. It's a strategy that's easy to prototype but has surprising depth to it: what if only one side of our offer keeps getting matched and we accumulate an exposure? What odds do we quote for the two bets? What sizes?
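To make "sized our bets correctly" concrete, here is a small worked sketch. It ignores commission, and the function names are made up for illustration. If a back of stake S_b at odds O_b and a lay of stake S_l at odds O_l both get matched, the profit is the same whichever way the race goes when S_l = S_b * O_b / O_l.

```python
def hedged_lay_stake(back_stake, back_odds, lay_odds):
    """Lay stake that equalises the profit across both outcomes."""
    return back_stake * back_odds / lay_odds


def outcome_pnl(back_stake, back_odds, lay_stake, lay_odds):
    """Return (profit if the runner wins, profit if it loses), before commission."""
    win = back_stake * (back_odds - 1) - lay_stake * (lay_odds - 1)
    lose = lay_stake - back_stake
    return win, lose


# Back 10.00 at 2.02 and lay at 2.00: the spread is collected on either outcome.
lay_stake = hedged_lay_stake(10.0, 2.02, 2.00)
win, lose = outcome_pnl(10.0, 2.02, lay_stake, 2.00)
print(round(lay_stake, 2), round(win, 2), round(lose, 2))  # 10.1 0.1 0.1
```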

With that in mind, I realised that the way I was collecting data was kind of wrong. The idea of the strategy output being the desired position at a given time is easily backtest-able: just multiply the position at each point by the price movement at the next tick and take the cumulative sum. The price in this case is probably the mid (the average of the back and the lay odds) and for our cost model we could assume that we cross the spread at every trade (meaning aggressively take the available odds instead of placing a passive order and waiting to get matched).
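The position-based backtest described above might look something like this sketch. The cost model is an assumption: crossing the spread is modelled as paying half of the quoted spread relative to the mid on every change of position, and `backtest_pnl` and its arguments are illustrative names.

```python
def backtest_pnl(positions, mids, spreads):
    """Cumulative P&L of holding positions[t] from tick t to tick t + 1.

    positions[t]: desired position decided at tick t
    mids[t]:      mid price (average of the back and lay odds) at tick t
    spreads[t]:   back/lay spread at tick t
    """
    pnl = []
    total = 0.0
    prev_position = 0.0
    for t in range(len(mids) - 1):
        traded = abs(positions[t] - prev_position)
        total -= traded * spreads[t] / 2                 # cost of crossing the spread
        total += positions[t] * (mids[t + 1] - mids[t])  # price move on the position
        pnl.append(total)
        prev_position = positions[t]
    return pnl


print(backtest_pnl([1, 1, 0], [2.0, 2.5, 2.25, 2.25], [0.5, 0.5, 0.5, 0.5]))
# [0.25, 0.0, -0.25]
```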

Sadly, this doesn't fly with a market-making strategy: since we intend to be trading passively most of the time, we have to have a way to model when (or whether: while we're waiting, the price could move underneath us) our passive orders get matched.

How an order book works

If we have to delve into the details of market microstructure, it's worth starting from describing how a Betfair order book actually works. Thankfully, it's very similar to the way a real exchange order book works.

When a back (lay) order gets submitted to the book, it can either get matched, partially matched, or not matched at all. Let's say we want to match a new back (lay) order N against the book:

- take the orders at the opposite side of the book: lay (back).

- look only at those whose odds are at least as high (low) as N's.

- sort them by odds descending (ascending) and then by order placement time, oldest first.

- for each one of these orders O, while N has some remaining volume:

  - if N has more remaining volume than O, there's a partial match: we decrease N's remaining volume by O's volume, record a match and remove O from the book completely.

  - if N has less remaining volume than O, we decrease O's remaining volume by N's remaining volume, record a match and stop.

  - if both volumes are equal, we remove O from the book, record a match and stop.

- if N has any remaining volume, add it to the order book.
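The matching steps above can be sketched as a toy order book. This is an illustration rather than the simulator used in the project. It assumes that orders at equal odds are eligible to match and that a match is recorded at the resting order's odds, and the `Order` and `OrderBook` names are invented here.

```python
from dataclasses import dataclass


@dataclass
class Order:
    side: str    # "back" or "lay"
    odds: float
    volume: int  # remaining volume in pennies
    seq: int     # placement sequence number (lower means older)


class OrderBook:
    def __init__(self):
        self.orders = []
        self._seq = 0

    def submit(self, side, odds, volume):
        """Match a new order against the book; return a list of (odds, volume) matches."""
        self._seq += 1
        new = Order(side, odds, volume, self._seq)
        if side == "back":
            # Best odds for a backer are the highest ones; oldest order first.
            resting = [o for o in self.orders if o.side == "lay" and o.odds >= odds]
            resting.sort(key=lambda o: (-o.odds, o.seq))
        else:
            resting = [o for o in self.orders if o.side == "back" and o.odds <= odds]
            resting.sort(key=lambda o: (o.odds, o.seq))
        matches = []
        for other in resting:
            if new.volume == 0:
                break
            traded = min(new.volume, other.volume)
            new.volume -= traded
            other.volume -= traded
            matches.append((other.odds, traded))
            if other.volume == 0:
                self.orders.remove(other)
        if new.volume > 0:
            self.orders.append(new)  # the unmatched remainder rests in the book
        return matches


book = OrderBook()
book.submit("lay", 1.70, 200)
book.submit("lay", 1.70, 100)
book.submit("lay", 1.72, 50)
print(book.submit("back", 1.70, 300))  # [(1.72, 50), (1.7, 200), (1.7, 50)]
```

The best-priced order is consumed first, then the two orders at 1.70 in the order they were placed, leaving 50 pennies of the younger one in the book.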

It's easy to see that to get our passive order matched, we want to either offer a price that's better than the best price in the book (highest odds if we're offering a back (laying) or lowest odds if we're offering a lay (backing)) or be the earliest person at the current best price level. One might think of matching at a given price level as a queue: if we place a back at 1.70 (thus adding to the available to lay amount) and there is already £200 available at that level, we have to wait until that money gets matched before we can be matched — unless while we are waiting, the best available to lay odds become 1.69 and so we'll have to cancel our unmatched bet and move it lower.
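The queue analogy can be turned into a tiny calculation. The sketch below makes a simplifying assumption that nobody ahead of us cancels, so only matched volume shortens the queue; `fill_from_queue` is a made-up helper name.

```python
def fill_from_queue(queue_ahead, our_size, matched_volumes):
    """Cumulative amount of our order filled after each match at our price level.

    queue_ahead:     volume that was already resting at the level before us
    our_size:        size of our passive order
    matched_volumes: successive volumes matched at this level
    """
    filled = 0
    fills = []
    for volume in matched_volumes:
        eaten = min(queue_ahead, volume)  # older orders get matched first
        queue_ahead -= eaten
        volume -= eaten
        filled += min(our_size - filled, volume)
        fills.append(filled)
    return fills


# 200 ahead of our 50: the first 200 matched goes to the orders before us.
print(fill_from_queue(200, 50, [120, 100, 80]))  # [0, 20, 50]
```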

So how do we use this knowledge to backtest a market-making strategy? The neatest way of doing this that I found was taking the order book snapshots that I collected and reconstructing a sequence of order book events from them: actions that take the order book from one state to the next one. Those can be either back/lay order placements (possibly triggering a match) or cancellations. If these events were to be plugged back into the order book simulator, we would end up with order book states that mirror exactly what we started with.

However, if we were to also insert some of our own actions in the middle of this stream, we would be able to model the matching of passive orders: for example, if the best available lay at a given point in time was 1.70 and we placed an order at the better odds (for others) at 1.69, all orders in the event stream that would have matched at 1.70 would now be matching against our new order, until it got exhausted. Similarly, if a new price level was about to be opened at 1.69 and we were the first order at that level, all orders would be matching against us as well.

Inferring order book events

So I set out to convert my collected snapshots into a series of events. In theory, doing this is easy: every snapshot has the odds and volumes of available back and lay bets, as well as the total amount traded (matched) at each odds. So, to begin, we take the differences between these 3 vectors (available to back, available to lay, total traded) in consecutive snapshots. If there's no traded difference, all other differences are pure placements and cancellations without matches. So if there's more available to back/lay at a given level, we add a PLACE_BACK/PLACE_LAY instruction to the event stream — and if there's less, we add a CANCEL_BACK/CANCEL_LAY instruction for a given amount. Since we don't know which exact order is getting cancelled (just the volume), the order book simulator just picks a random one to take the volume away from.

Things get slightly more tricky if there's been a trade at a given odds level. If there's only been one order book action between the snapshots, it's fairly easy to infer what happened: there will be a difference in either the outstanding back or lay amounts at those odds, meaning there was an aggressive back/lay that immediately got matched against the order. Sometimes there can be several odds levels for which there is a traded amount change — that means an order was so large that it matched against orders at several odds. After the traded amount differences are reconciled, we need to adjust the available to back/lay differences to reflect that and then proceed as in the previous paragraph to reconcile unmatched placements/cancellations.

There was a problem with the data I collected, however: API-NG limits the number of requests to it to about 5 per second. Even worse, although I was making only about 3 requests per second, the API would still sometimes throttle me and not give back an HTTP response until a few seconds later. This meant that order book snapshots had more than one event between them, and in some cases there were way too many possible valid sets of events that brought the order book from one state to the other.

Thankfully, a slightly more modern way of getting data was available.

Attempt 2: Betfair Exchange Stream API

Unlike its brother, API-NG, the Exchange Stream API is a push API: one opens a TCP connection to Betfair and sends a message asking to subscribe to certain markets, at which point Betfair sends back updates whenever an order placement/cancellation/match occurs. Like its brother, the Stream API uses JSON to serialise data as opposed to a binary protocol. Messages are separated with a newline character and can be either an image (the full order book state) or an update (a delta saying which levels in the order book have changed). The Stream API can also be used to subscribe to the state of the user's own orders in order to be notified when they have been matched/cancelled.

This was pretty cool, because it meant that my future market making bot would be able to react to market events basically immediately, as opposed to 200ms later, and wouldn't be liable to get throttled.

Here's an example of a so-called market change message:

    {"op":"mcm","id":2,"clk":"ALc3AK0/AI04","pt":1502698655391,"mc":[
      {"id":"1.133280054","rc":[
        {"atb":[[5.7,5.7]],"atl":[[5.7,0]],"trd":[[5.7,45.42]],"id":12942916}]}]}

This one says that in the market 1.133280054, we can't bet against (lay) the runner 12942916 at odds 5.7, but instead can bet up to £5.70 on it. In addition, the total bets matched at odds 5.7 now amount to £45.42 (actually it's half as much, because Betfair counts both sides of the bet together). In essence, this means that there was an aggressive lay at 5.7 that got partially matched against all of the available lay, and £5.70 of it remained in the book as available to back, basically moving the market price.
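Here is a minimal sketch of how such a message could be applied to a local copy of the book. It assumes that every `[odds, size]` pair carries the new absolute size at that level and that a size of 0 removes the level, which is how the message above reads. `apply_market_change` and the layout of `books` are illustrative choices, and full-image messages are not treated specially here.

```python
import json


def apply_market_change(line, books):
    """Apply one newline-delimited market change message to `books` in place.

    books maps (market_id, runner_id) to {"atb": {...}, "atl": {...}, "trd": {...}},
    where each inner dict maps odds to the size at that level.
    """
    msg = json.loads(line)
    if msg.get("op") != "mcm":
        return
    for market in msg.get("mc", []):
        for runner in market.get("rc", []):
            key = (market["id"], runner["id"])
            book = books.setdefault(key, {"atb": {}, "atl": {}, "trd": {}})
            for field in ("atb", "atl", "trd"):
                for odds, size in runner.get(field, []):
                    if size == 0:
                        book[field].pop(odds, None)  # the level has emptied
                    else:
                        book[field][odds] = size


line = ('{"op":"mcm","id":2,"clk":"ALc3AK0/AI04","pt":1502698655391,"mc":['
        '{"id":"1.133280054","rc":['
        '{"atb":[[5.7,5.7]],"atl":[[5.7,0]],"trd":[[5.7,45.42]],"id":12942916}]}]}')
books = {("1.133280054", 12942916): {"atb": {}, "atl": {5.7: 10.0}, "trd": {}}}
apply_market_change(line, books)
print(books[("1.133280054", 12942916)])
# {'atb': {5.7: 5.7}, 'atl': {}, 'trd': {5.7: 45.42}}
```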

As for the other parts of the message, pt is the timestamp and clk is a unique identifier that the client at subscription time can send to Betfair to get the whole market image starting from that point (and not from the point the client connects: this is used to restart consumption from the same point if the client crashes).

Another program had to be added to my Daedric pantheon. Sanguine, "whose sphere is hedonistic revelry, debauchery, and passionate indulgences of darker natures", would connect to the Stream API, subscribe to the order book stream for a given market and dump everything it received into a text file. It was similar to Hermaeus, except I had given up on creating a proper schema at this point to store the data into PostgreSQL (and even in the case of Hermaeus it was just the metadata that had a schema, the actual order book data used the json type). Still, I was able to schedule instances of it to be executed in order to record some more stream data dumps.

And indeed, the data now was much more granular and was actually possible to reconcile (except for a few occasions where some bets would be voided and the total amount traded at given odds would decrease).

Here's some code that, given the current amounts available to back, lay and traded at given odds, as well as a new order book snapshot, applies the changes to our book and returns the events that have occurred.

    def process_runner_msg(runner_msg, atb, atl, trds):
        """Apply one runner change to the book and return the inferred events.

        atb, atl and trds map odds to amounts in pennies and are updated in place.
        They are assumed to default missing levels to 0, e.g. collections.defaultdict(int).
        """
        events = []

        # represent everything in pennies; round() avoids float truncation
        # (int(0.29 * 100) would give 28)
        back_diff = {p: int(round(v * 100)) - atb[p] for p, v in runner_msg.get('atb', [])}
        lay_diff = {p: int(round(v * 100)) - atl[p] for p, v in runner_msg.get('atl', [])}
        trd_diff = {p: int(round(v * 100)) - trds[p] for p, v in runner_msg.get('trd', [])}

        for p, v in trd_diff.items():
            if not v:
                continue
            if v < 0:
                print("ignoring negative trade price %f volume %d" % (p, v))
                continue
            if p in back_diff and back_diff[p] < 0:
                back_diff[p] += v // 2  # betfair counts the trade twice (on both sides of the book)
                atb[p] -= v // 2
                trds[p] += v
                events.append(('PLACE_LAY', p, v // 2))
            elif p in lay_diff and lay_diff[p] < 0:
                lay_diff[p] += v // 2
                atl[p] -= v // 2
                trds[p] += v
                events.append(('PLACE_BACK', p, v // 2))
            elif p in back_diff:
                back_diff[p] += v // 2
                atb[p] -= v // 2
                trds[p] += v
                events.append(('PLACE_LAY', p, v // 2))
            elif p in lay_diff:
                lay_diff[p] += v // 2
                atl[p] -= v // 2
                trds[p] += v
                events.append(('PLACE_BACK', p, v // 2))
            else:
                print("can't reconcile a trade of %d at %.2f" % (v, p))

        # these were aggressive placements -- need to make sure we're sorting backs (place_lay) by price descending
        # (to simulate us knocking book levels out) and lays vice versa
        events = sorted([e for e in events if e[0] == 'PLACE_LAY'], key=lambda e: e[1], reverse=True) + \
            sorted([e for e in events if e[0] == 'PLACE_BACK'], key=lambda e: e[1])

        # whatever is left after the trades is a passive placement or a cancellation
        for p, v in back_diff.items():
            if v > 0:
                events.append(('PLACE_BACK', p, v))
            elif v < 0:
                events.append(('CANCEL_BACK', p, -v))
            atb[p] += v

        for p, v in lay_diff.items():
            if v > 0:
                events.append(('PLACE_LAY', p, v))
            elif v < 0:
                events.append(('CANCEL_LAY', p, -v))
            atl[p] += v

        return events

Parallel to this, I was also slowly starting to implement an Exchange Stream API client that would subscribe to and handle market change messages in Scala. This contraption, named Azura (whose sphere is "the period of transition and change"), would form the base of the actual live market making bot and/or any other trading strategies that would use the Stream API.

Conclusion

Next time on project Betfair, we'll start implementing and backtesting our market making bot, as well as possibly visualising all the data we collected.

As usual, posts in this series will be available at https://kimonote.com/@mildbyte:betfair or on this RSS feed. Alternatively, follow me on twitter.com/mildbyte.
